n8n Database Integration Patterns for SaaS Applications

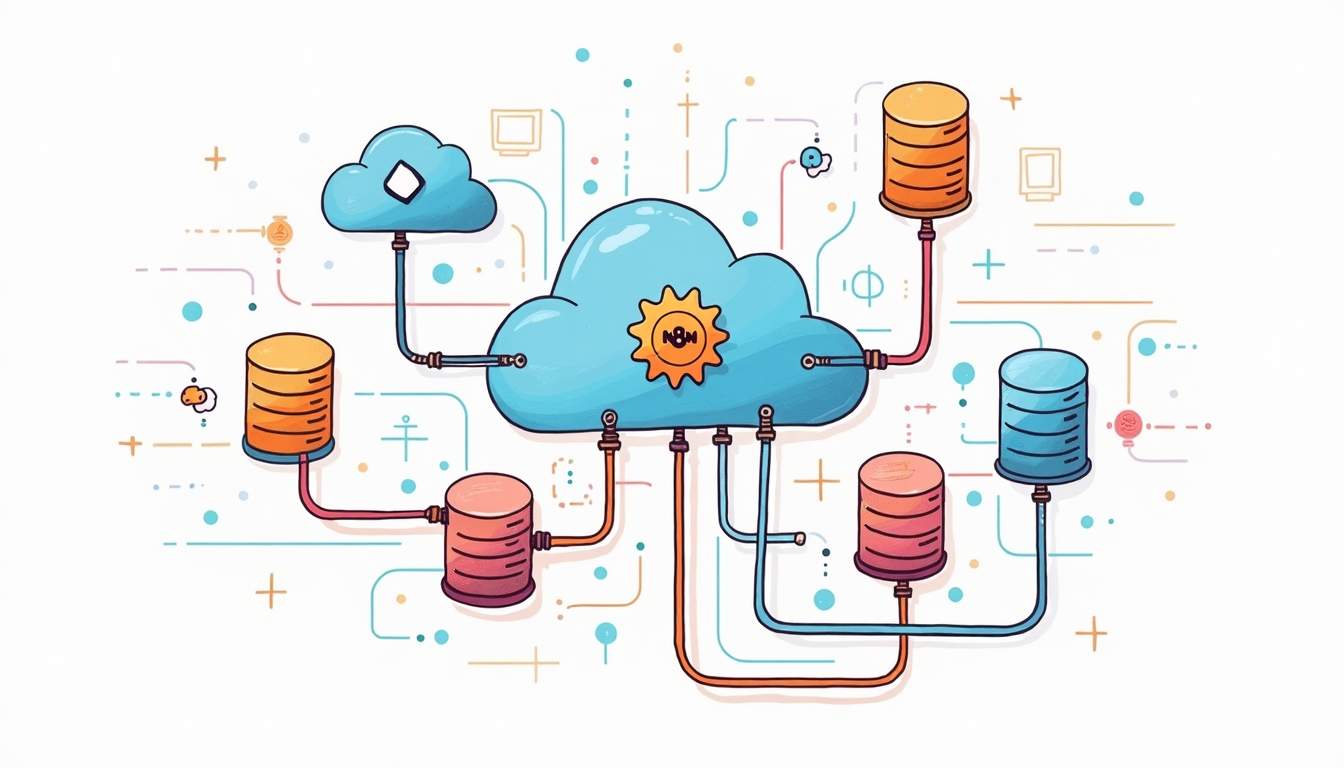

This article explores practical integration patterns that use n8n to connect PostgreSQL and MongoDB in SaaS applications, with a focus on workflow optimization and real-time synchronization. It covers architectural patterns, performance considerations, error handling, and example flows you can adapt for multi-tenant systems, analytics pipelines, and event-driven features.

Observability and testing deserve as much attention as the workflows themselves. Instrument workflows with structured logging, metrics, and traces so you can correlate n8n executions with database load and user-facing incidents. Emit metrics for processed record counts, error rates, and processing latency to a monitoring stack (Prometheus/Grafana, Datadog). Capture structured error details and sample payloads (sanitized of PII) to speed up debugging. Implement end-to-end tests that run against staging databases and validate upserts, conflict-resolution logic, and rollback behavior. Include chaos scenarios such as transient database connectivity loss or duplicate events to confirm that your idempotency and retry strategies hold up.
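The per-run metrics and sanitized error samples described above could be collected with something like the following Python sketch. It assumes records arrive as plain dicts and that a denylist of field names (`PII_FIELDS`, hypothetical) is enough to identify PII; `process_batch` and `RunMetrics` are illustrative names, not n8n APIs. The JSON summary line is meant to be picked up by whatever log shipper or exporter feeds your monitoring stack; that wiring is not shown.

```python
import json
import logging
import time
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, Iterable, List

# Hypothetical denylist of PII field names; replace with your own schema's fields.
PII_FIELDS = {"email", "phone", "full_name"}


def sanitize(record: Dict[str, Any]) -> Dict[str, Any]:
    """Return a shallow copy with PII fields masked so the payload is safe to log."""
    return {k: ("[REDACTED]" if k in PII_FIELDS else v) for k, v in record.items()}


@dataclass
class RunMetrics:
    processed: int = 0
    failed: int = 0
    started: float = field(default_factory=time.monotonic)
    error_samples: List[Dict[str, Any]] = field(default_factory=list)

    def summary(self, workflow: str, execution_id: str) -> Dict[str, Any]:
        total = self.processed + self.failed
        return {
            "workflow": workflow,
            "execution_id": execution_id,
            "processed": self.processed,
            "failed": self.failed,
            "error_rate": self.failed / total if total else 0.0,
            "latency_ms": round((time.monotonic() - self.started) * 1000, 1),
            "error_samples": self.error_samples,
        }


def process_batch(records: Iterable[Dict[str, Any]],
                  handler: Callable[[Dict[str, Any]], None],
                  workflow: str,
                  execution_id: str,
                  max_samples: int = 3) -> Dict[str, Any]:
    """Run handler over each record, counting outcomes and keeping a few sanitized error samples."""
    metrics = RunMetrics()
    for record in records:
        try:
            handler(record)
            metrics.processed += 1
        except Exception as exc:  # keep going; one bad record should not abort the batch
            metrics.failed += 1
            if len(metrics.error_samples) < max_samples:
                metrics.error_samples.append(
                    {"error": str(exc), "payload": sanitize(record)})
    summary = metrics.summary(workflow, execution_id)
    # One JSON line per run; default=str keeps non-JSON values (e.g. datetimes) from crashing the log call.
    logging.getLogger("sync.metrics").info(json.dumps(summary, default=str))
    return summary
```

Measuring latency with a monotonic clock avoids skew from wall-clock adjustments, and capping `max_samples` keeps a badly failing batch from flooding the logs.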

Plan for schema evolution and change data capture (CDC) as your product matures. Lightweight migrations that add nullable fields or new collections are straightforward, but breaking changes require coordinated rollouts across PostgreSQL schemas, MongoDB document shapes, and n8n transformation logic. Consider CDC tools (PostgreSQL logical decoding, Debezium, or cloud-native change streams) to drive near-real-time incremental syncs. Compared with polling an `updated_at` column, CDC offers lower latency and better fidelity, because it captures hard deletes and every committed intermediate update rather than only the latest state at poll time. Coupling CDC with feature flags and phased backfills lets you evolve data models without service disruption, while n8n can orchestrate validation, backfill jobs, and progressive cutovers between canonical sources and read-optimized stores.
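To make the fidelity argument concrete, here is a minimal Python sketch of applying Debezium-style change events to a read-optimized store. It assumes each event payload carries an `op` code (`c`, `u`, `d`, or `r` for snapshot reads), `before`/`after` row images, and a `source.lsn` position; a plain dict stands in for the MongoDB collection, and `apply_change` is a hypothetical helper. The per-document LSN guard is an assumption of this sketch, relying on changes to a single row arriving with increasing LSNs.

```python
from typing import Any, Dict

# In-memory stand-in for a MongoDB collection: document _id -> document.
Store = Dict[Any, Dict[str, Any]]

SUPPORTED_OPS = ("c", "u", "d", "r")


def apply_change(store: Store, event: Dict[str, Any], key: str = "id") -> str:
    """Apply one Debezium-style change event and report what happened."""
    op = event["op"]                      # c=create, u=update, d=delete, r=snapshot read
    if op not in SUPPORTED_OPS:
        raise ValueError(f"unsupported op: {op}")
    lsn = event["source"]["lsn"]          # WAL position of this change
    row = event["before"] if op == "d" else event["after"]
    doc_id = row[key]
    current = store.get(doc_id)
    # Assumption: changes to one row arrive with increasing LSNs, so anything
    # at or below the stored LSN is a duplicate or a stale replay.
    if current is not None and current["_lsn"] >= lsn:
        return "skipped"
    if op == "d":
        # Keep a tombstone so an older, late-arriving upsert cannot resurrect the row.
        store[doc_id] = {"_id": doc_id, "_lsn": lsn, "_deleted": True}
        return "deleted"
    store[doc_id] = {**row, "_id": doc_id, "_lsn": lsn, "_deleted": False}
    return "upserted"
```

A polling job keyed on `updated_at` never sees a hard-deleted row again, whereas here the delete arrives as its own event. Keeping a tombstone instead of removing the document is a design choice: it lets a late, older event be recognized as stale, at the cost of a periodic cleanup job to purge old tombstones.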

Operationalizing this architecture also requires disciplined testing and deployment practices. Create integration tests that simulate event streams, including out-of-order delivery, duplicate events, and schema changes, to validate idempotency and error-handling logic in n8n workflows. Run load tests against the entire pipeline (PostgreSQL -> broker -> n8n -> MongoDB) to uncover bottlenecks and verify that backpressure mechanisms behave as expected. Automate schema migrations and event-schema changes through CI/CD pipelines, and include contract tests between producers and consumers so that a schema change in PostgreSQL or a transformation in n8n doesn’t silently break downstream services.
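An integration test for out-of-order and duplicate delivery can be framed as a convergence check: apply the events once in order, then replay many shuffled streams with injected duplicates, and require the same final state every time. The Python sketch below assumes a hypothetical event shape (`entity_id`, `version`, `data`) and uses an in-memory dict in place of the staging database; in a real suite the handler would trigger the n8n workflow (for example through a Webhook node) and read the result back from MongoDB.

```python
import random
from typing import Any, Callable, Dict, List

Event = Dict[str, Any]
State = Dict[str, Dict[str, Any]]
Handler = Callable[[State, Event], None]


def versioned_upsert(state: State, event: Event) -> None:
    """Idempotent, order-insensitive handler: keep the highest version per entity."""
    current = state.get(event["entity_id"])
    if current is None or event["version"] > current["version"]:
        state[event["entity_id"]] = {"version": event["version"], "data": event["data"]}


def naive_overwrite(state: State, event: Event) -> None:
    """Last-arrival-wins handler, shown for contrast; not safe under reordering."""
    state[event["entity_id"]] = {"version": event["version"], "data": event["data"]}


def chaos_stream(events: List[Event], seed: int, dup_rate: float = 0.3) -> List[Event]:
    """Duplicate a random subset of events, then shuffle to simulate reordering."""
    rng = random.Random(seed)  # seeded so a failing trial can be reproduced
    stream = events + [e for e in events if rng.random() < dup_rate]
    rng.shuffle(stream)
    return stream


def converges(handler: Handler, events: List[Event], trials: int = 50) -> bool:
    """True if every chaotic replay ends in the same state as in-order delivery."""
    expected: State = {}
    for event in events:
        handler(expected, event)
    for seed in range(trials):
        actual: State = {}
        for event in chaos_stream(events, seed):
            handler(actual, event)
        if actual != expected:
            return False
    return True
```

Run against `versioned_upsert`, the check should pass for any stream; run against `naive_overwrite`, it should fail, because whichever duplicate or stale event happens to arrive last wins.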

Finally, consider operational costs and maintainability: managed services (Kafka-as-a-service, managed Debezium connectors, cloud Pub/Sub) can reduce operational burden but introduce vendor lock-in and billing variability. For smaller teams, favor simpler, more observable designs—fewer moving parts, clear retry semantics, and comprehensive logging—so that incidents can be diagnosed quickly. Prioritize idempotent handlers, rate-limited consumers, and dead-lettering strategies to keep the system resilient as load and feature complexity grow.
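Retries, dead-lettering, and rate limiting combine naturally in a single consumer loop. This is a minimal Python sketch, assuming an in-process `deque` as the queue and a hypothetical `TransientError` class that separates retryable failures from permanent ones; a real deployment would more likely rely on the broker's own retry and dead-letter facilities or an n8n error workflow. Rate limiting here is a simple fixed pause between messages rather than a token bucket.

```python
import time
from collections import deque
from typing import Any, Callable, Deque, Dict, List

Message = Dict[str, Any]


class TransientError(Exception):
    """Hypothetical marker for retryable failures (timeouts, connection resets)."""


def consume(queue: Deque[Message],
            handler: Callable[[Message], None],
            dead_letters: List[Dict[str, Any]],
            max_attempts: int = 3,
            base_delay: float = 0.5,
            max_per_second: float = 20.0,
            sleep: Callable[[float], None] = time.sleep) -> int:
    """Drain the queue at a bounded rate; retry transient errors, dead-letter the rest."""
    min_interval = 1.0 / max_per_second
    handled = 0
    while queue:
        message = queue.popleft()
        for attempt in range(1, max_attempts + 1):
            try:
                handler(message)
                handled += 1
                break
            except TransientError as exc:
                if attempt == max_attempts:
                    # Retries exhausted: park the message with enough context to replay it later.
                    dead_letters.append(
                        {"message": message, "error": str(exc), "attempts": attempt})
                else:
                    sleep(base_delay * 2 ** (attempt - 1))  # exponential backoff
            except Exception as exc:
                # Non-retryable (e.g. schema mismatch): dead-letter immediately
                # instead of blocking the rest of the queue.
                dead_letters.append(
                    {"message": message, "error": str(exc), "attempts": attempt})
                break
        sleep(min_interval)  # fixed spacing caps throughput at max_per_second
    return handled
```

Because a message can be retried after a partial success, the handler behind this loop still needs to be idempotent. Injecting `sleep` keeps the loop testable without real delays.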