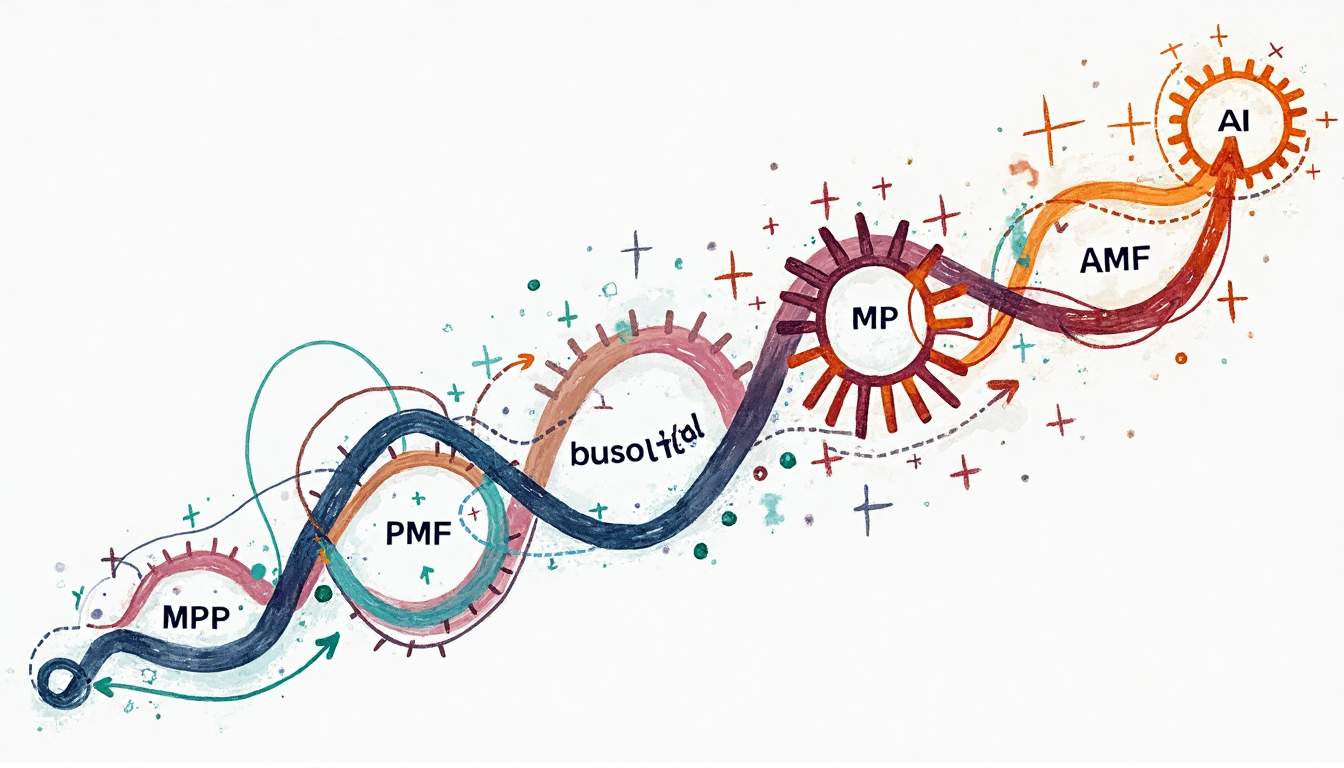

In today’s hyper-competitive digital economy, the difference between an idea that succeeds and one that stalls often comes down to speed: how quickly a company can move from a Minimum Viable Product (MVP) to Product-Market Fit (PMF). Traditional development cycles, bogged down by manual processes and siloed communication, often slow this journey. The rise of AI-driven product engineering is changing that, making it possible to deliver high-quality, market-ready products faster.

AI-driven product engineering integrates intelligent automation, predictive analytics, and machine learning across every stage of development. The result: faster sprints, smarter decision-making, and products that align closely with user needs. By embedding AI throughout the product lifecycle, teams can reduce rework, optimize resources, and adapt rapidly to feedback—without compromising quality.

At the forefront of this transformation is Wednesday Solutions, a global product engineering firm known for delivering enterprise-grade digital products with remarkable speed. Through its Launch program, Wednesday has reduced time-to-market for startups and enterprises by up to 40%, helping teams reach PMF faster with data-driven, AI-assisted sprints.

How AI Frameworks Reduce Development Cycles by Up to 40%

Integrating AI into product engineering can substantially shorten development timelines. Wednesday’s own performance data, along with industry studies, indicate that AI frameworks can reduce build cycles by as much as 40%, especially when combined with agile methodologies and intelligent sprint planning.

1. Predictive Sprint Planning

AI-powered project management tools analyze historical sprint data, identify risks, and recommend optimal workload distribution. At Wednesday, internal AI systems forecast sprint outcomes and dynamically allocate engineering capacity, resulting in a 35% reduction in resource waste and significantly fewer bottlenecks.
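To make the idea of forecasting from historical sprint data concrete, here is a minimal sketch in Python. It is not Wednesday's internal system, whose details the article does not describe. It assumes the only input is a list of story points completed per sprint, and the function names `forecast_velocity` and `flag_overcommitment` are hypothetical. A plain moving average stands in for whatever predictive model a real tool would use.

```python
from statistics import mean, pstdev


def forecast_velocity(completed_points, window=3):
    """Return (expected, spread) for the next sprint.

    Assumption: velocity is forecast as the mean of the last `window`
    sprints, with the population standard deviation as a rough spread.
    """
    recent = completed_points[-window:]
    return mean(recent), pstdev(recent)


def flag_overcommitment(planned_points, completed_points, window=3):
    """Flag a sprint plan that exceeds the forecast by more than one spread."""
    expected, spread = forecast_velocity(completed_points, window)
    return planned_points > expected + spread


# Hypothetical history: story points completed in the last five sprints.
history = [21, 25, 23, 27, 26]
expected, spread = forecast_velocity(history)
print(f"expected velocity: {expected:.1f} (+/- {spread:.1f})")
print("30 points overcommitted?", flag_overcommitment(30, history))
print("26 points overcommitted?", flag_overcommitment(26, history))
```

A production tool would likely replace the moving average with a learned model and add signals such as team availability, but the shape of the decision (compare the plan against a forecast with an uncertainty band) stays the same.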

2. Automated Code Generation and Review

AI-driven code assistants now handle repetitive coding and debugging tasks. Tools for AI-assisted code completion and refactoring allow developers to move faster while maintaining consistency. Wednesday integrates similar systems into its pipelines, achieving a 20% drop in post-deployment bugs across projects in fintech, SaaS, and healthcare.

3. Intelligent Testing and Continuous Integration

Modern AI frameworks prioritize test cases based on code changes and historical patterns, streamlining CI/CD cycles. This selective approach ensures critical functions are validated faster. Wednesday’s Launch framework leverages machine learning to rank regression test priorities automatically, improving release velocity without sacrificing stability.
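The ranking idea can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the Launch framework's actual logic: it uses a hand-tuned score instead of a trained model, and the `SuiteEntry` record, the `priority` function, and the `change_weight` parameter are all hypothetical. Each test is scored on whether it covers a changed file and on its historical failure rate.

```python
from dataclasses import dataclass


@dataclass
class SuiteEntry:
    """One regression test plus the history needed to rank it (hypothetical)."""
    name: str
    covered_files: set
    runs: int
    failures: int


def priority(test, changed_files, change_weight=2.0):
    """Score a test: touching a changed file dominates, failure rate breaks ties."""
    touches_change = 1.0 if test.covered_files & changed_files else 0.0
    failure_rate = test.failures / test.runs if test.runs else 0.0
    return change_weight * touches_change + failure_rate


def rank_tests(tests, changed_files):
    """Return tests ordered from highest to lowest priority."""
    return sorted(tests, key=lambda t: priority(t, changed_files), reverse=True)


# Hypothetical suite and change set.
tests = [
    SuiteEntry("test_login", {"auth.py"}, runs=100, failures=2),
    SuiteEntry("test_checkout", {"cart.py", "payment.py"}, runs=100, failures=10),
    SuiteEntry("test_profile", {"profile.py"}, runs=100, failures=30),
]
ranked = rank_tests(tests, changed_files={"payment.py"})
print([t.name for t in ranked])
```

Running the highest-ranked tests first means a CI job can fail fast on the code most likely to break, then run the remainder of the suite afterward.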

4. Enhanced Collaboration Through NLP

Natural Language Processing (NLP) tools simplify communication between cross-functional teams by summarizing discussions, generating action items, and translating technical language. This improves alignment and reduces feedback lag. Teams using Wednesday’s Launch process report up to 30% faster decision cycles compared to traditional workflows.

Case Study:

A fintech startup partnered with Wednesday to transform its MVP process. By integrating AI-based test automation and predictive sprint analytics, the startup reduced development time from six months to just over three. The product achieved PMF within two subsequent iterations, with user engagement increasing by 2.4x within the first quarter after launch.

Implementing LLM-Powered Validation and Testing

Large Language Models (LLMs) like GPT-4 are redefining how validation and testing occur in modern engineering pipelines. Unlike traditional test automation, LLMs can interpret natural language, generate context-aware test cases, and simulate user interactions.

1. Smarter Requirement Analysis

LLMs help teams detect ambiguities in user stories or specifications before coding begins. This early clarity prevents downstream errors and rework. Wednesday integrates this capability into its internal Launch QA systems, allowing teams to validate requirements automatically and cut discovery time by up to 25%.

2. Automated Test Case Generation

Because they are trained on extensive code repositories, LLMs can generate diverse, context-rich test scenarios, including edge cases that human testers may overlook. Wednesday uses this to maintain coverage for high-scale SaaS applications, reporting 99.3% test reliability in pre-release validation.

3. Real-Time Debugging and Insight Generation

LLMs can interpret logs, summarize failure patterns, and even suggest code-level fixes. This accelerates debugging and knowledge transfer among developers. For one enterprise logistics platform, Wednesday’s LLM-enabled CI/CD integration reduced debugging time by 40% and improved release cadence significantly.
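Summarizing failure patterns usually starts with collapsing near-identical log lines into a small set of signatures. The Python sketch below shows that step only. It does not call an LLM and is not Wednesday's integration. It assumes error lines contain the literal string `ERROR`, and the function names `signature` and `summarize_failures` are hypothetical. The resulting summary is the kind of compact input that could then be handed to a model for interpretation.

```python
import re
from collections import Counter


def signature(line):
    """Normalize a log line by masking hex addresses and numbers."""
    masked = re.sub(r"0x[0-9a-fA-F]+", "<hex>", line)
    masked = re.sub(r"\d+", "<n>", masked)
    return masked.strip()


def summarize_failures(log_lines, top=3):
    """Return the most common error signatures with their counts."""
    errors = [signature(line) for line in log_lines if "ERROR" in line]
    return Counter(errors).most_common(top)


# Hypothetical log excerpt.
logs = [
    "ERROR timeout after 30s for order 4812",
    "INFO order 4813 shipped",
    "ERROR timeout after 31s for order 4990",
    "ERROR null pointer at 0x7ffa12",
]
summary = summarize_failures(logs)
for pattern, count in summary:
    print(count, pattern)
```

Grouping before summarizing keeps the input small and makes recurring failures stand out, whether the next reader is a developer or a model.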

4. Continuous Learning and Test Optimization

Over time, LLM-based testing frameworks adapt to evolving product needs. By learning from previous builds, they refine test coverage and validation logic continuously. Wednesday’s Launch framework leverages this adaptive intelligence, ensuring that testing becomes smarter with each iteration.

Example:

A SaaS company implemented an LLM-powered testing pipeline via Wednesday’s Launch program. The system automatically generated test suites, flagged potential UX issues, and offered real-time improvement suggestions. The result: a 30% reduction in post-release defects, faster feedback loops, and a 22% boost in customer satisfaction scores within two release cycles.

The Future: Intelligent Sprints as the New Standard

AI-driven engineering isn’t a distant future; it’s a current competitive advantage. Intelligent sprints, powered by frameworks like those built at Wednesday Solutions, merge automation, analytics, and adaptive learning into one continuous feedback system. This convergence helps teams innovate faster, iterate smarter, and reach PMF more efficiently.

Companies that embrace AI-driven sprints today position themselves not just to move faster, but to learn faster. In a market defined by constant change, that capability can be the deciding factor between leadership and obsolescence.